Modern GPUs accelerate both 2D and 3D operations. As a fantasy, if we could have one or more compute unit per pixel on the screen, things would be crazy fast.

Since graphics are fundamentally a parallel problem, there is not yet a practical limit as to how many cores can be utilized at once.

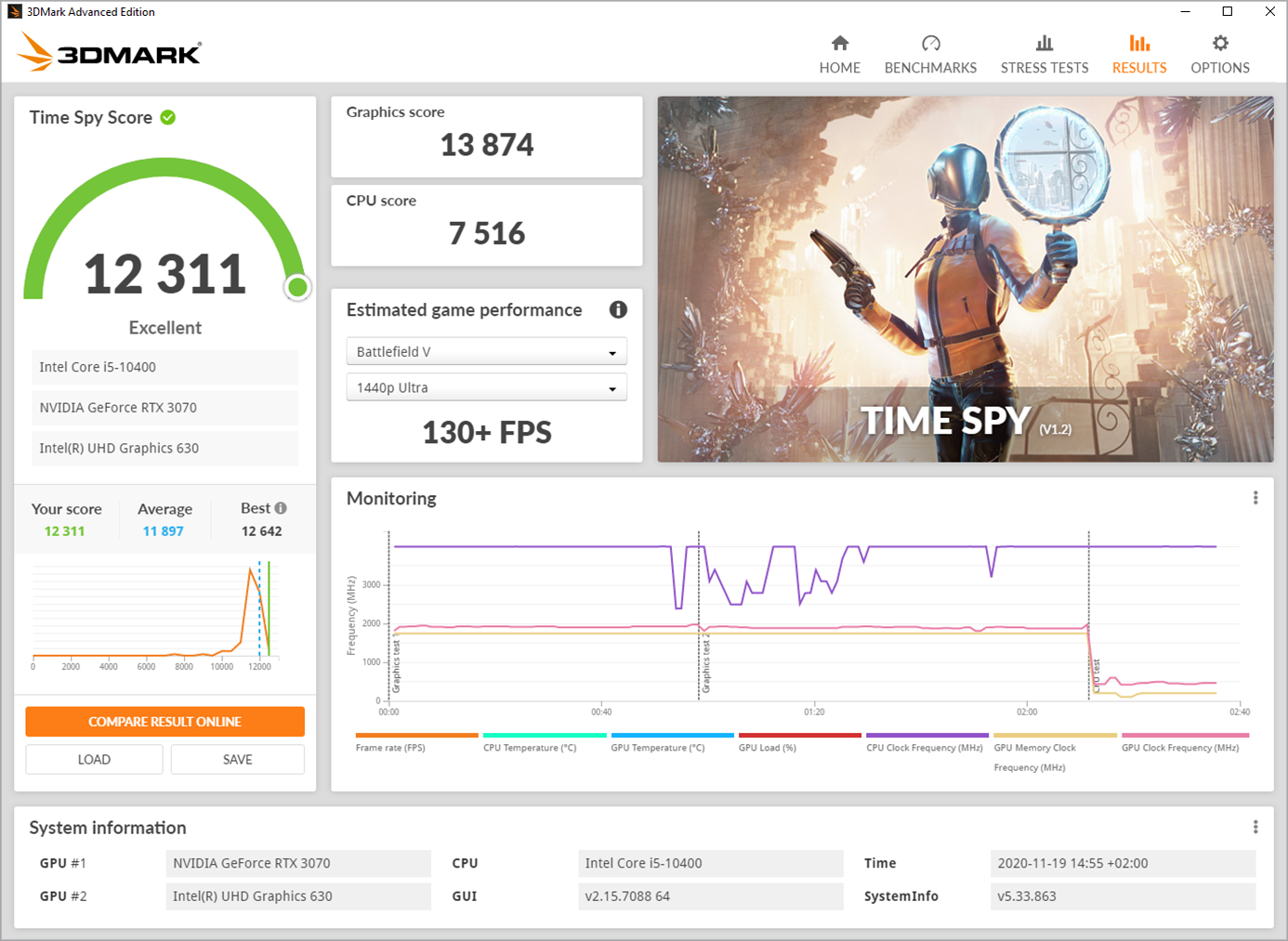

And since they can also be used to calculate physics (movie) as well, the GPU can be involved in nearly every aspect of computer graphics. It is important to understand that there are inefficiencies related to managing a multi-GPU setup, which is why the performance is never linear, or directly proportional, to the number of GPUs.Įach green square represents a compute unit of the GPU (courtesy of NVIDIA)īesides computing pixel colors, GPUs also move geometry around, or define the curvature of surfaces to be rendered, thanks to tessellation capabilities. At this point, it is possible to add many cards with one or more GPUs on a PC. This time, SLI was rebranded Scalable Link Interface.

The same SLI name was later used by NVIDIA which owns the 3DFX intellectual property. 3DFX’s multi-GPU brand was called SLI (Scan Line Interleave) which described how the rendering was split across two cards. This is achieved by adding more compute units to a single chip, or by adding many chips into a computer with a multi-GPU setup.Ī consumer-level GPU like the 3DFX Voodoo had one compute unit and one texture unit, while today’s fastest GPUs feature thousands of units (the Titan Z card has 5760 GPU cores ), and achieve performance levels that are thousands of times higher.Ĭonsumer-level Multi-GPU became popular thanks to the 3DFX Voodoo 2, and sources inside 3DFX once said that 30% of their customers were buying a second card to double the graphics performance. It is therefore possible to scale performance by adding more compute units. Since each pixel on the screen can (mostly) be worked-on independently from one another. Note that the definition of GPU Cores vary greatly from one vendor to the next, so this is not a number that should generally be used to compare GPUs from different brands.Ĭomputer graphics is fundamentally an “embarrassingly” parallel-problem, which means that the workload can easily be spread across multiple compute units. On the outside, GPUs look just like another chip, but inside, there is an array of dozens, hundreds or thousands of small computing units (or “GPU Cores”) that work in parallel, and this is essentially why GPUs are so much faster than CPUs to compute images: hundreds of small computing cores get the job done faster than a handful of big cores. Such a processing unit often describes a chip or a sub-unit of a larger chip called “system on chip”, aka SoC. GPUs were originally designed to accelerate graphics operations, thanks to specialized hardware which operates at a much faster rate than general purpose processors called Central Processing Unit, or CPU. AMD uses the term VPU (pdf link), which means Visual Computing Unit.

The GPU acronym stands for Graphics Processing Unit (or graphics processor), a term which was coined by NVIDIA in 1999 when it released its GeForce 256 “GPU”.
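To make the "embarrassingly parallel" idea concrete, here is a minimal Python sketch. It is an illustration only, not how any GPU or driver actually works. It assumes a hypothetical `shade(x, y)` function that computes one pixel's color from nothing but its own coordinates. Because no pixel depends on another, rows can be handed to any number of workers in any order and the frame comes out identical. The workers here are Python threads standing in for compute units. Python's interpreter lock means they show how the work *splits*, not a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical tiny framebuffer size, chosen only for illustration.
WIDTH, HEIGHT = 64, 48


def shade(x: int, y: int) -> int:
    """Hypothetical pixel shader: a diagonal grayscale gradient (0-255).

    It reads nothing but its own coordinates, so no pixel depends on another.
    """
    return (x * 255 // (WIDTH - 1) + y * 255 // (HEIGHT - 1)) // 2


def shade_row(y: int) -> list[int]:
    """Compute every pixel of one row."""
    return [shade(x, y) for x in range(WIDTH)]


def render_serial() -> list[list[int]]:
    """A single 'compute unit' walks the whole frame, row after row."""
    return [shade_row(y) for y in range(HEIGHT)]


def render_parallel(units: int = 8) -> list[list[int]]:
    """Hand rows to a pool of workers standing in for compute units.

    Rows are independent, so no locking or communication is needed.
    pool.map returns results in input order, so the frame is assembled
    exactly as in the serial version.
    """
    with ThreadPoolExecutor(max_workers=units) as pool:
        return list(pool.map(shade_row, range(HEIGHT)))


if __name__ == "__main__":
    frame = render_parallel()
    assert frame == render_serial()
    print(f"rendered {len(frame)} rows x {len(frame[0])} pixels")
```

The same decomposition works with 1, 8 or 48 workers. That is the property the article points to when it imagines one compute unit per pixel.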
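The 3DFX Scan Line Interleave scheme described in the article can be sketched the same way. Each card renders only every N-th scan line, and the partial results are woven back into one frame. This is a simplified model for illustration. The frame size, the `shade` function, and the helper names `render_on_card` and `combine` are hypothetical and do not reflect the actual Voodoo 2 hardware or driver interface.

```python
# Hypothetical frame size and shader, for illustration only.
WIDTH, HEIGHT = 16, 12


def shade(x: int, y: int) -> int:
    """Hypothetical per-pixel color function."""
    return (x * 7 + y * 13) % 256


def render_on_card(card: int, num_cards: int) -> dict[int, list[int]]:
    """Render only the scan lines assigned to this card.

    With two cards, card 0 draws even lines (0, 2, 4, ...) and
    card 1 draws odd lines (1, 3, 5, ...).
    """
    return {
        y: [shade(x, y) for x in range(WIDTH)]
        for y in range(card, HEIGHT, num_cards)
    }


def combine(partials: list[dict[int, list[int]]]) -> list[list[int]]:
    """Interleave the cards' scan lines back into one frame."""
    frame = [None] * HEIGHT
    for lines in partials:
        for y, row in lines.items():
            frame[y] = row
    if any(row is None for row in frame):
        raise ValueError("some scan lines were not rendered")
    return frame


if __name__ == "__main__":
    NUM_CARDS = 2
    partials = [render_on_card(c, NUM_CARDS) for c in range(NUM_CARDS)]
    sli_frame = combine(partials)
    single_card = render_on_card(0, 1)  # one card renders every line
    assert sli_frame == [single_card[y] for y in range(HEIGHT)]
    print(sorted(partials[0]))  # prints [0, 2, 4, 6, 8, 10]
```

Splitting by alternate lines gives each card roughly half of the pixels. The final image is the same as what a single card would produce.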
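The article notes that multi-GPU performance never scales linearly. One standard way to see why is Amdahl's law, a general model of parallel speedup that the article itself does not cite. The model treats part of the work as something that does not shrink when more GPUs are added. Here that part loosely stands in for coordination costs such as synchronizing cards. The sketch below is a simplification, and the 90% parallel fraction is a made-up example value, not a measurement.

```python
def amdahl_speedup(num_gpus: int, parallel_fraction: float) -> float:
    """Amdahl's law: speedup when only `parallel_fraction` of the work
    splits evenly across GPUs and the remainder does not shrink at all."""
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / num_gpus)


if __name__ == "__main__":
    P = 0.9  # hypothetical: 90% of each frame's work parallelizes perfectly
    for n in (1, 2, 4, 8):
        print(f"{n} GPU(s): {amdahl_speedup(n, P):.2f}x")
```

With the hypothetical 90% figure, this prints speedups of 1.00x, 1.82x, 3.08x and 4.71x. Each extra GPU helps less than the one before. Real multi-GPU overheads are more varied than a single fixed fraction, so treat this only as an intuition for why the curve bends.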
